new directors showcase

An Artificial Intelligence Music Video

Made over seven years ago.

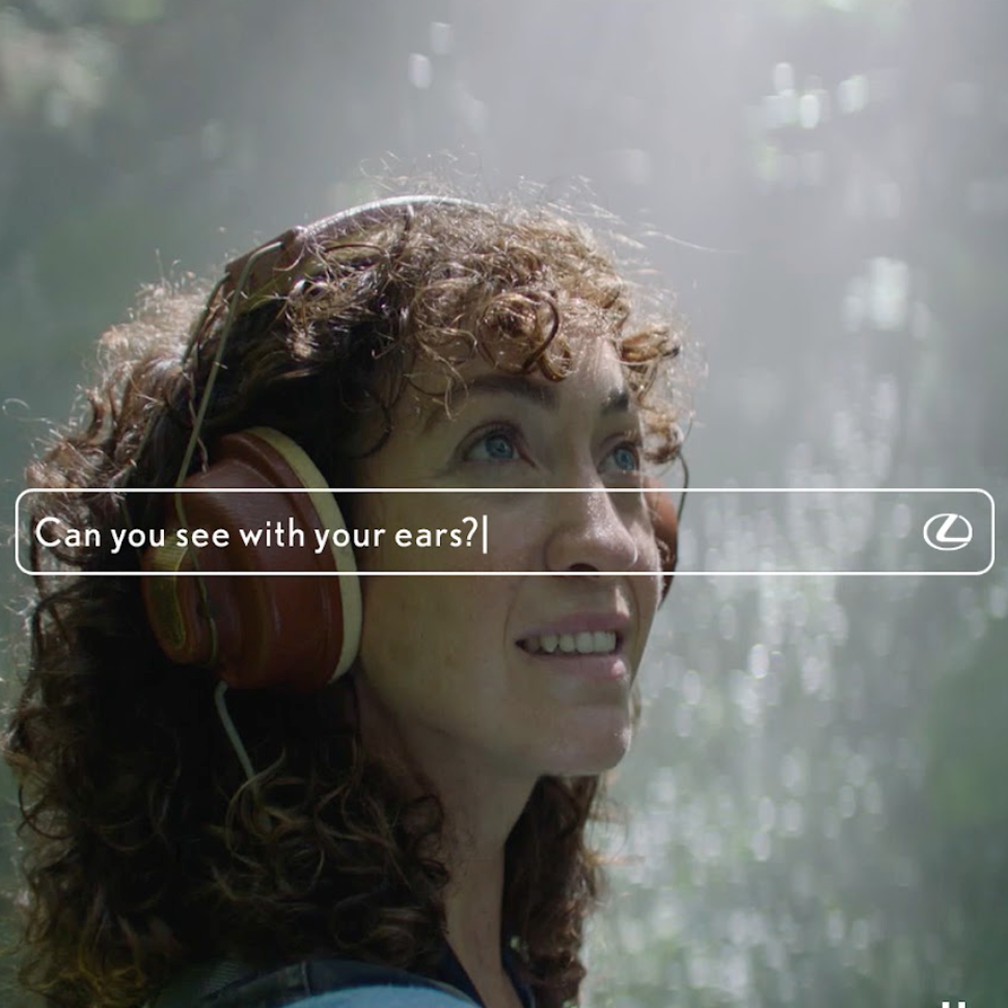

For the New Directors’ Showcase at the 2016 Cannes advertising festival, we asked a simple question: can machines be creative?

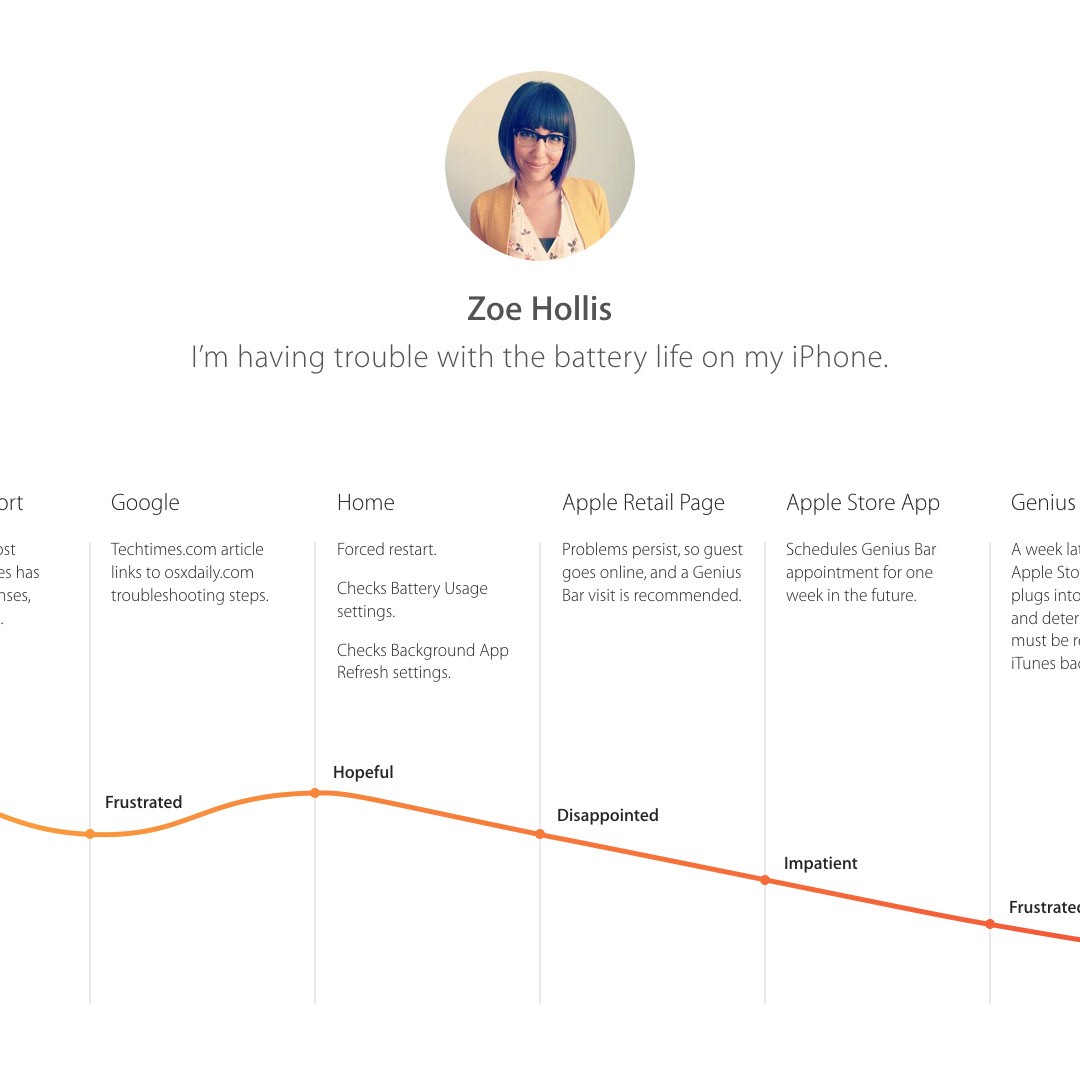

The AI director featured in the 2016 New Directors’ Showcase goes under the pseudonym of Anni Mathison, and the result of the experiment commissioned by Saatchi & Saatchi and Team One is a short film named “Eclipse,” conceived, edited and directed by machines.

The short film debuts on 23 June, the anniversary of scientist Alan Mathison Turing’s birth in 1912. Turing was the first to ask the question “Can machines think?”, which leads immediately to the next question: can machines be creative?

“Eclipse” uses several technologies in a never-before-seen combination to create the film from start to finish:

IBM Watson and Microsoft’s AI chatbot Ms_Rinna (Microsoft Rinna) registered the emotion behind the lyrics to generate a completely original storyline for the music video.

In addition to helping provide the storyline, Ms_Rinna was asked for opinions on characters, wardrobe, location and catering for the shoot.

The team used Affectiva’s facial recognition software and EEG data to help cast the perfect co-star.

Drones gave direction on the day of the shoot by using a combination of data from IBM Watson’s tone analysis and Affectiva’s facial recognition software. This data allowed the drones to capture intense emotional moments with mathematical precision.

AI was used again during the edit. The team created a proprietary program that identified which clips to put where based on the beat of the song and the emotional intent of the lyrics.
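The editing program itself was proprietary and its internals are not described here, so the following is only a minimal sketch of the general idea: cut on each beat and pick a clip whose emotion matches the lyric at that moment. Every name in it (`Clip`, `plan_edit`, the emotion labels, the beat and lyric timings) is a hypothetical stand-in, not the team's actual code or data.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    emotion: str  # hypothetical label, e.g. "joy" or "sadness"


def lyric_emotion_at(t, lyric_segments):
    """Return the emotion of the lyric segment covering time t, or None."""
    for start, end, emotion in lyric_segments:
        if start <= t < end:
            return emotion
    return None


def plan_edit(beats, lyric_segments, clips):
    """Cut on every beat and assign a clip matching the lyric's emotion.

    Assumes `clips` is non-empty and `beats` is sorted in ascending order.
    """
    plan = []
    used = {}  # emotion -> number of cuts already assigned for that emotion
    for start, end in zip(beats, beats[1:]):
        emotion = lyric_emotion_at(start, lyric_segments)
        # Fall back to all clips when nothing matches the lyric's emotion.
        matching = [c for c in clips if c.emotion == emotion] or clips
        # Rotate through the matching clips to vary the shots.
        index = used.get(emotion, 0)
        used[emotion] = index + 1
        plan.append((start, end, matching[index % len(matching)].name))
    return plan


if __name__ == "__main__":
    beats = [0.0, 0.5, 1.0, 1.5, 2.0]
    lyrics = [(0.0, 1.0, "joy"), (1.0, 2.0, "sadness")]
    clips = [Clip("a", "joy"), Clip("b", "joy"), Clip("c", "sadness")]
    for cut in plan_edit(beats, lyrics, clips):
        print(cut)
```

In a real pipeline the beat times would come from audio analysis and the emotion labels from a text or facial analysis service; here they are hard-coded to keep the sketch self-contained.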

All of the visual effects were created using a custom neural art program. This program allowed the machines to apply a filter to the raw footage, using reference images chosen to match the artist’s vision.

Case Study

The Project Film

The Music Video

Selling in the idea

Press & Features

Unfortunately it is no longer available in the US, but this BBC technology program came out to LA to feature us and the film we made.

We also travelled around the world talking about this project, including talks at SXSW in Austin, Viva Technology in Paris and several panels in LA and NYC.

Screen Grabs

Here are some selected screen grabs of the AI-generated neural art. We used shots from NASA's archives for the 'style' content of Mai Lan/the moon and landscape frames from Mad Max 4 for Michael/the sun.

With so much technology involved in our experimental shoot, and with no direct human feedback or input, I spent some of my time documenting the process for our behind-the-scenes documentary.